Exzeb: Artism Act II

Digital art and interactive storytelling applied to a live dance performance using Kinect motion capture and video mapping. A collaboration with Exzeb Dance Company.

Pushing boundaries, Artism offers an exhilarating perspective of performing arts able to move traditional and new audiences. UNIT9 worked with Exzeb Dance Company, merging pure classical dance techniques and new technologies to extend the stage and elevate the performance to a new dimension. Keeping the dance at heart, we used innovation as an instrument, and as a movement itself.

Artism is a whimsical triptych proposing a new approach to dance, exploring the notion from a philosophical, sociological or artistic point of view. In 2011, Exzeb presented Act I as the “uncompromising documentary” of the dancer. Encompassing text, voiceover and digital projections, it gave voice to the one who is often anonymous.

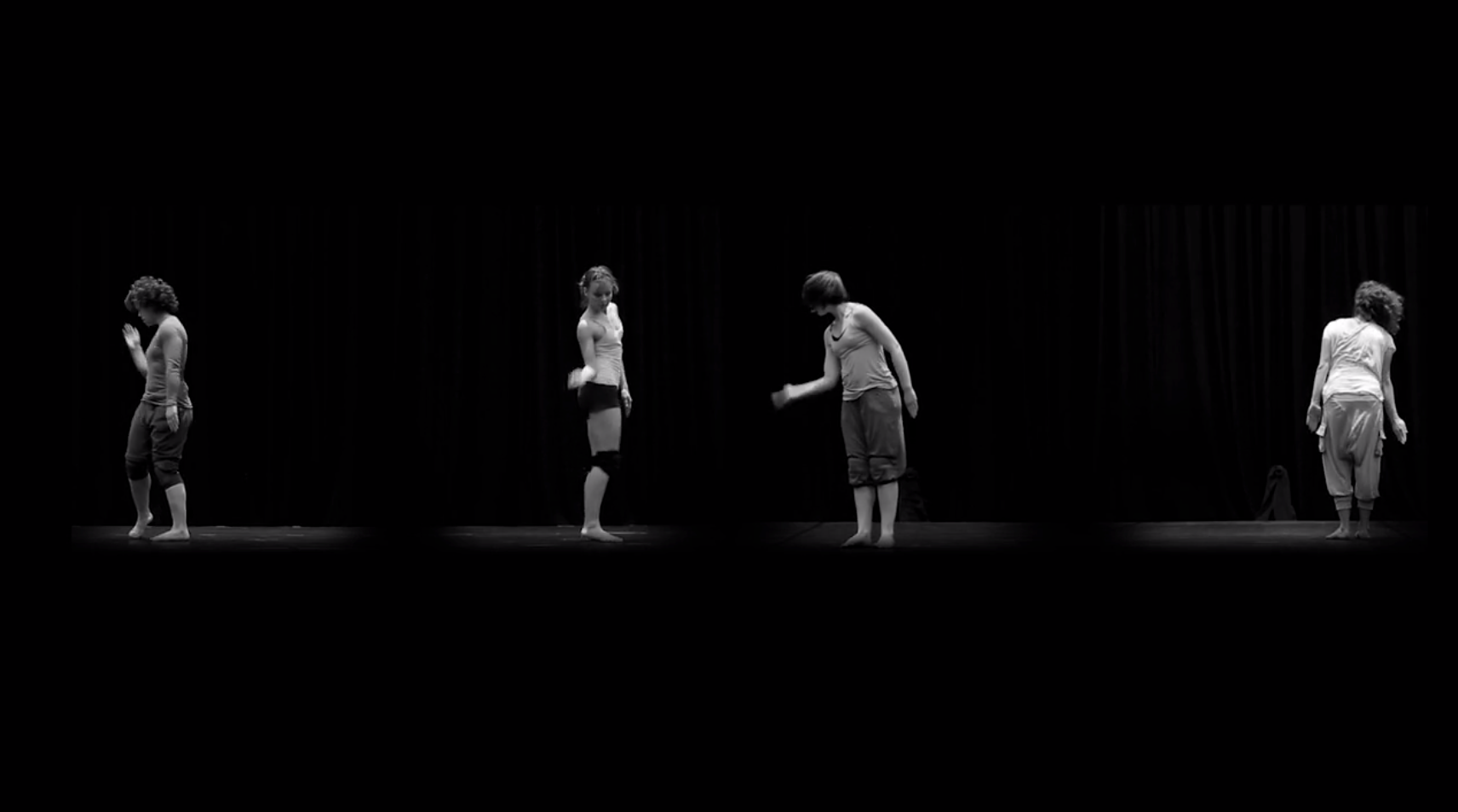

Artism Act II is a “study” of movement: movement seen as concept, theory and perception. Eric Nyira, Exzeb founder and choreographer, wanted to present the audience with four entirely new perspectives, to showcase movement as never seen before, through digital art and interactive storytelling. This is where our talented team stepped in.

The biggest challenge for the team was to find the place of technology in the narration without eclipsing the subject. With the ambition to transform dance on stage, we wanted digital to accompany the choreography and serve its message, rather than stealing the leading role.

“Eric Nyira’s choreography was inspired by the concept that movement in its entirety is abstract. We wanted the technology to serve the art, but when you think about it, digital is abstract as well, and the art served the technology just as much. In the end we managed to achieve a complete symbiosis between dance, music and technology, and the result is truly spectacular.” says Sophie Langohr, Visual Lead.

To achieve this, the team had to step out of their comfort zone. They had not only to understand the technology and be smart about the challenges that came with it, but also to immerse themselves in the artistic vision.

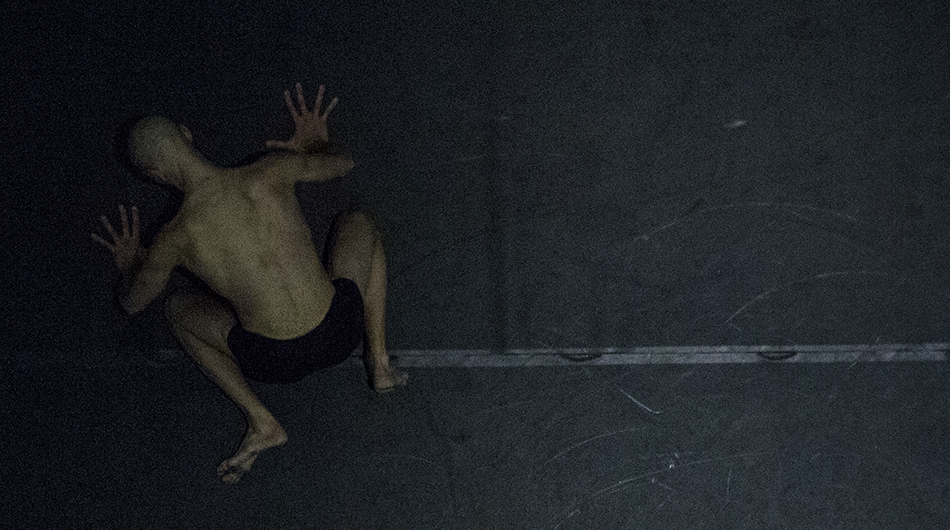

Artism has four parts, four percepts of movement. Percept I is about movement in its purest form. One dancer performs alone on stage, bare of any artifice. There are no visuals, no music, no intricate lighting.

Percept II shows movement in its entirety. Movement has a three-dimensional aspect that a spectator cannot fully visualise or perceive: something always remains hidden from the human eye. With 360-degree videos we ensured there were no blind spots.

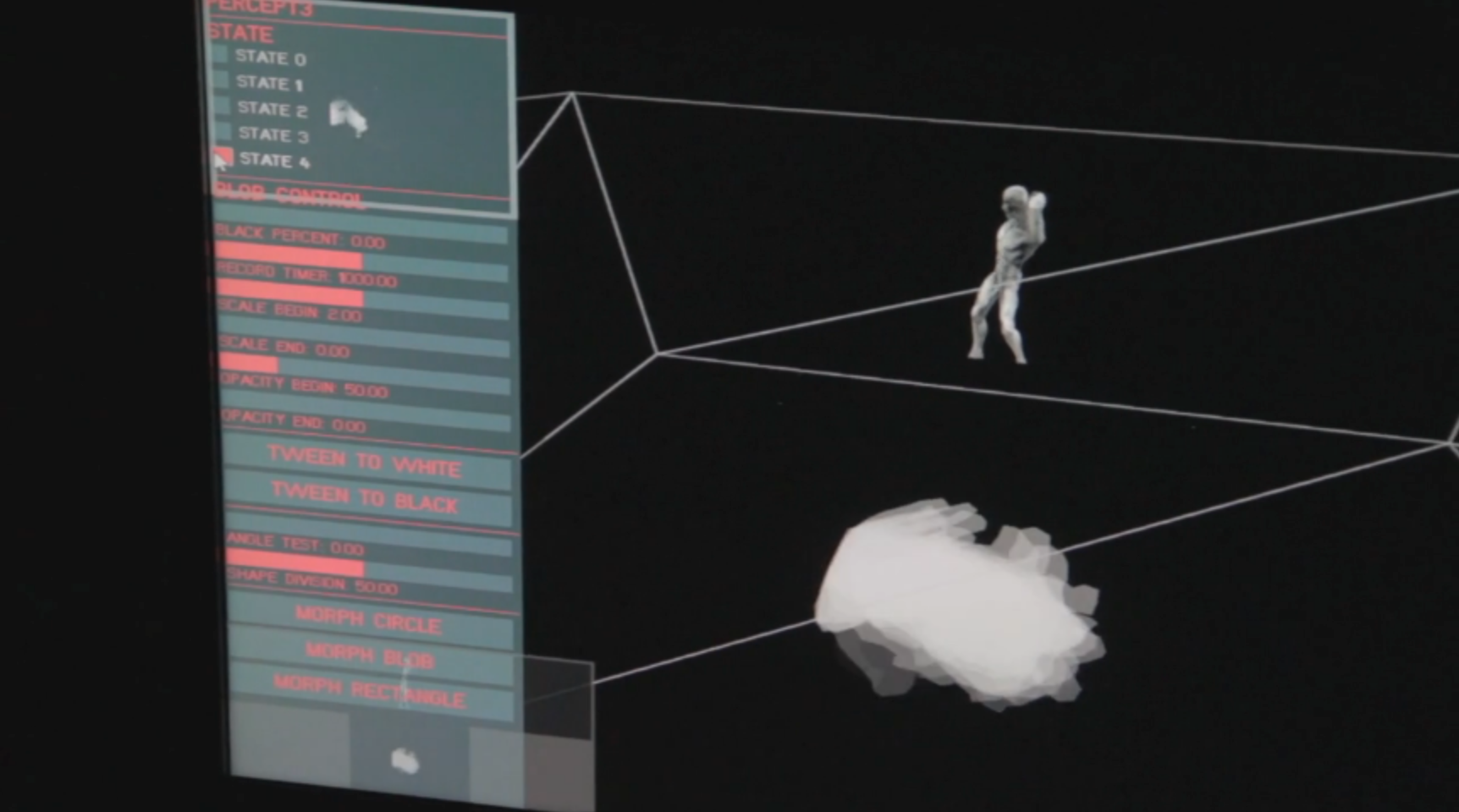

Percept III explores the relevance of evolution in movement. To complement the performance we created a 3D model of David, the dancer, projected on the back wall. The model not only mirrored his movement but also morphed from human into chimp when he knelt, and back to human when he got up.

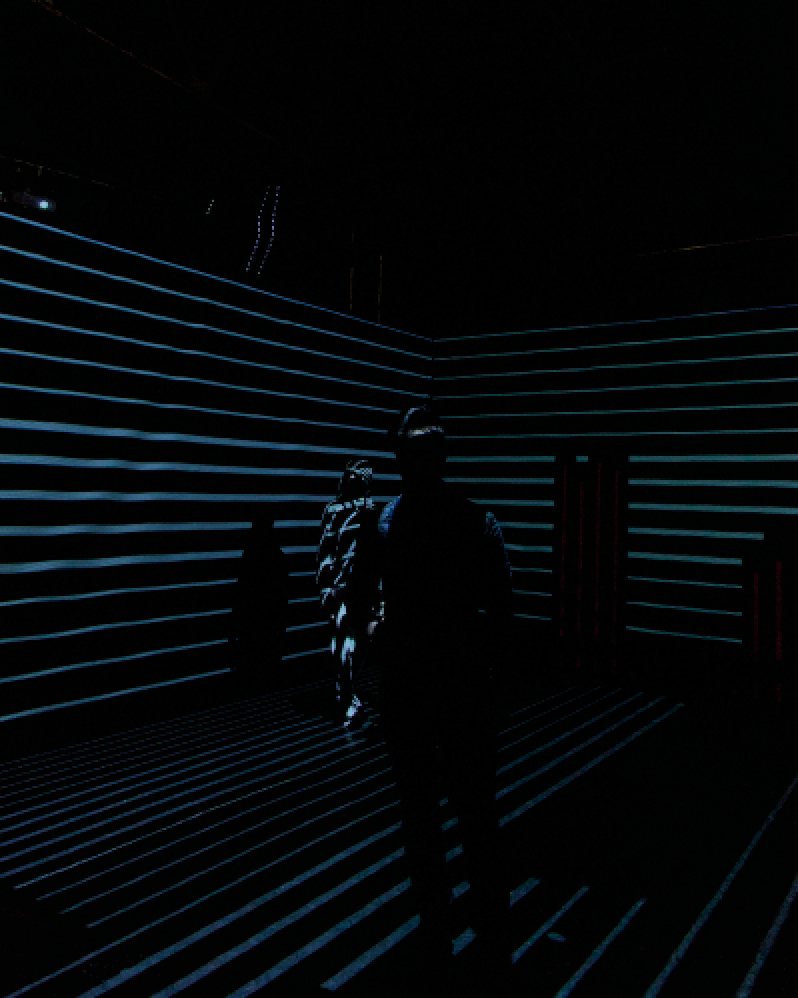

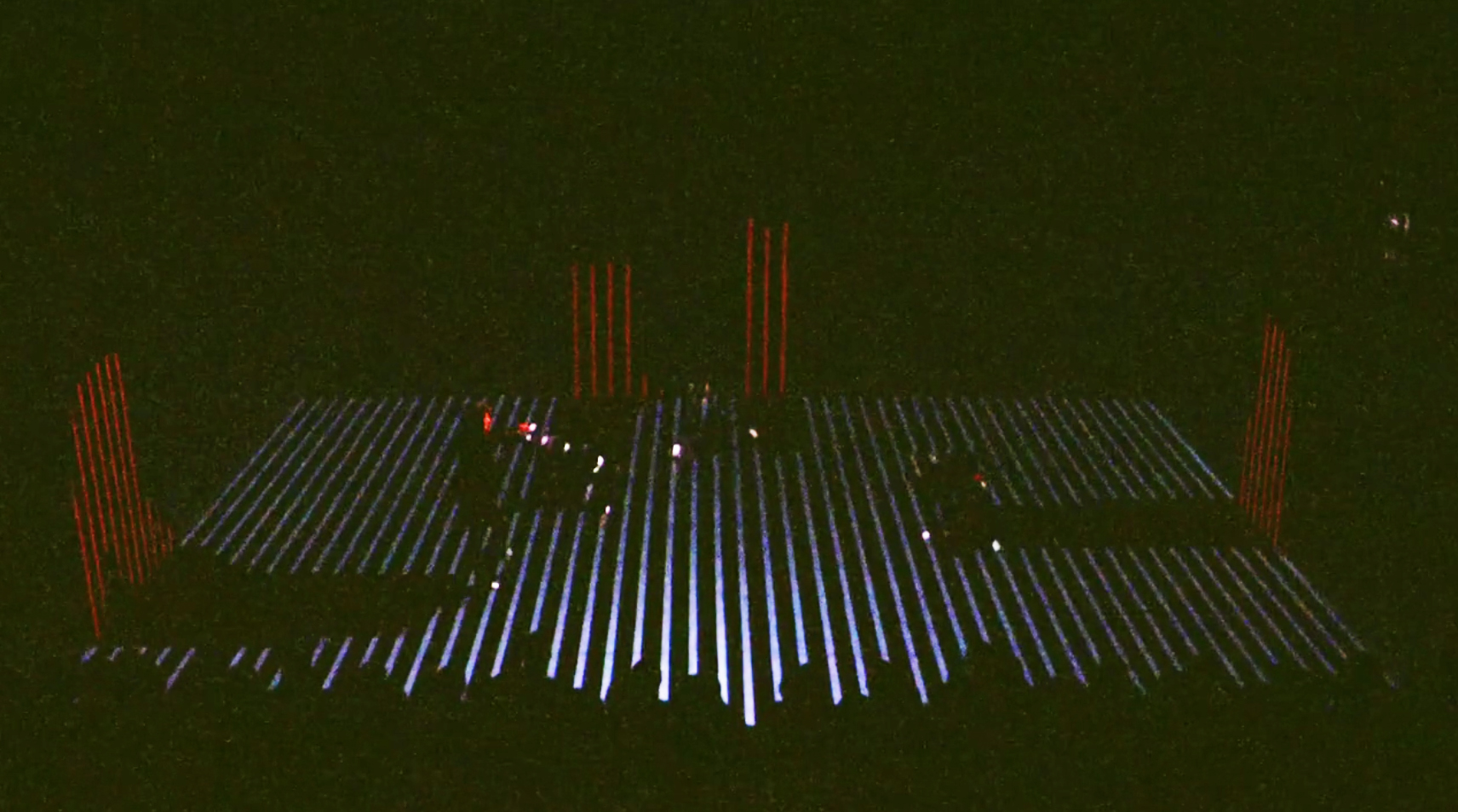

Percept IV is the culmination, when movement becomes its own entity, separate from the human body. Through video mapping, Kinect tracking and projection, every movement of the performers triggers a change on stage. The stage responds to the fluidity of the performers’ movements, reacting to the velocity, speed and dynamism of their bodies.

Technical Breakdown

We used visuals, sound, motion and storytelling to lead the audience through the performance. With each percept we introduced a new form of digital art or interactive storytelling, the one that best served the choreography’s narrative. The show culminates with the stage coming to life in a complete interlacing of dance and technology, powered by Kinect motion capture and video mapping.

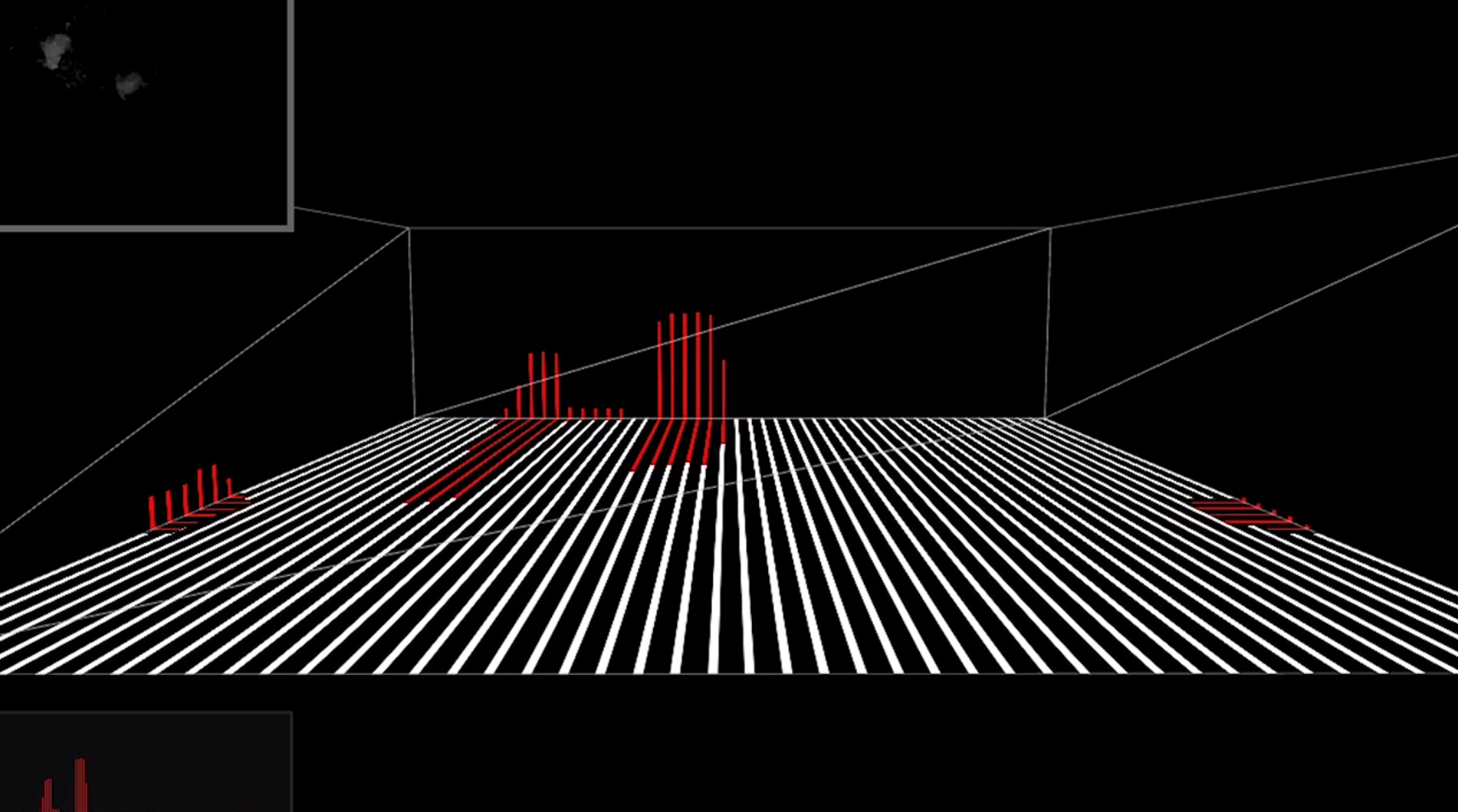

“To create such a show we needed software versatile enough to allow us to play everything in one go. We needed: output to 6 displays (the 5 projectors and 1 monitor to control the show); projection mapping, or rather digital keystoning; live tracking of multiple dancers; playing multiple videos all over the stage; generative visuals based on dancers’ movement; playing the show’s music; and efficiency, so that we could set it up very quickly,” explains Dimitris Doukas, Tech Lead.

Since no existing tool covered all of these requirements, the team developed their own using openFrameworks. The Timeline Software enabled the team to run the entire show with the press of a button, ensuring a smooth continuum.
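The idea of a single-button show timeline can be illustrated with a minimal sketch. This is not the team's Timeline Software (which was built with openFrameworks); it is a small Python illustration, and the `Cue` and `Timeline` names, the cue labels and the cue times are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Cue:
    start: float                  # seconds from the start of the show (hypothetical values below)
    name: str                     # hypothetical label for the cue
    action: Callable[[], None]    # what to trigger: a video, a sound, a scene change


class Timeline:
    """Fires each cue exactly once, in time order, as show time advances."""

    def __init__(self, cues: List[Cue]) -> None:
        self.cues = sorted(cues, key=lambda cue: cue.start)
        self.next_index = 0

    def update(self, show_time: float) -> List[str]:
        """Run every cue whose start time has been reached; return their names."""
        fired = []
        while (self.next_index < len(self.cues)
               and self.cues[self.next_index].start <= show_time):
            cue = self.cues[self.next_index]
            cue.action()
            fired.append(cue.name)
            self.next_index += 1
        return fired


# Hypothetical cue list; a real show would load this from a file.
log: List[str] = []
timeline = Timeline([
    Cue(0.0, "music_start", lambda: log.append("music")),
    Cue(90.0, "percept_2_video", lambda: log.append("video")),
    Cue(30.0, "lights_up", lambda: log.append("lights")),
])
```

Calling `timeline.update(t)` once per frame with the elapsed show time is enough to drive every cue from one clock, which is one way to obtain the "press of a button" behaviour described above.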

Visualisation Tool

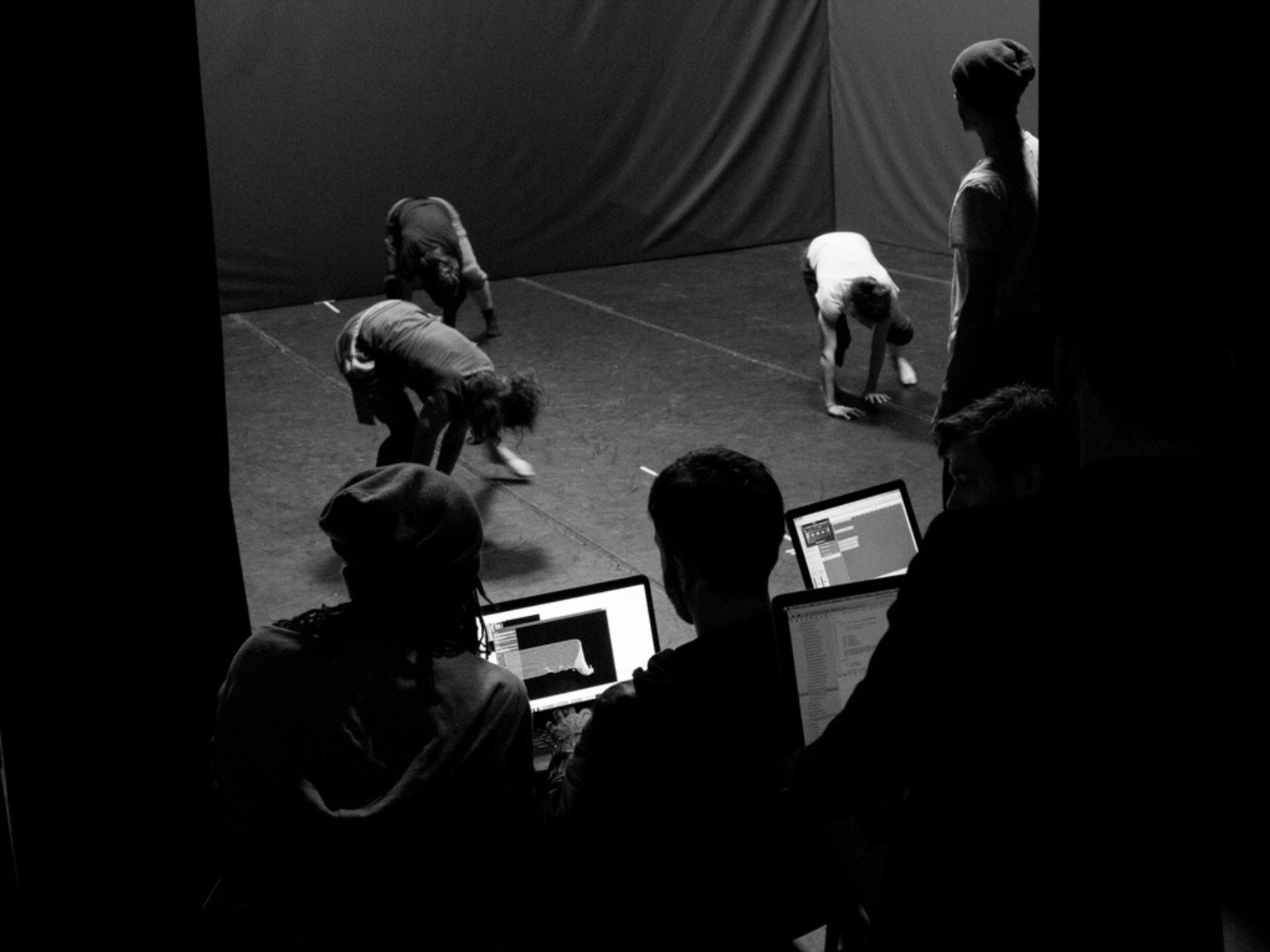

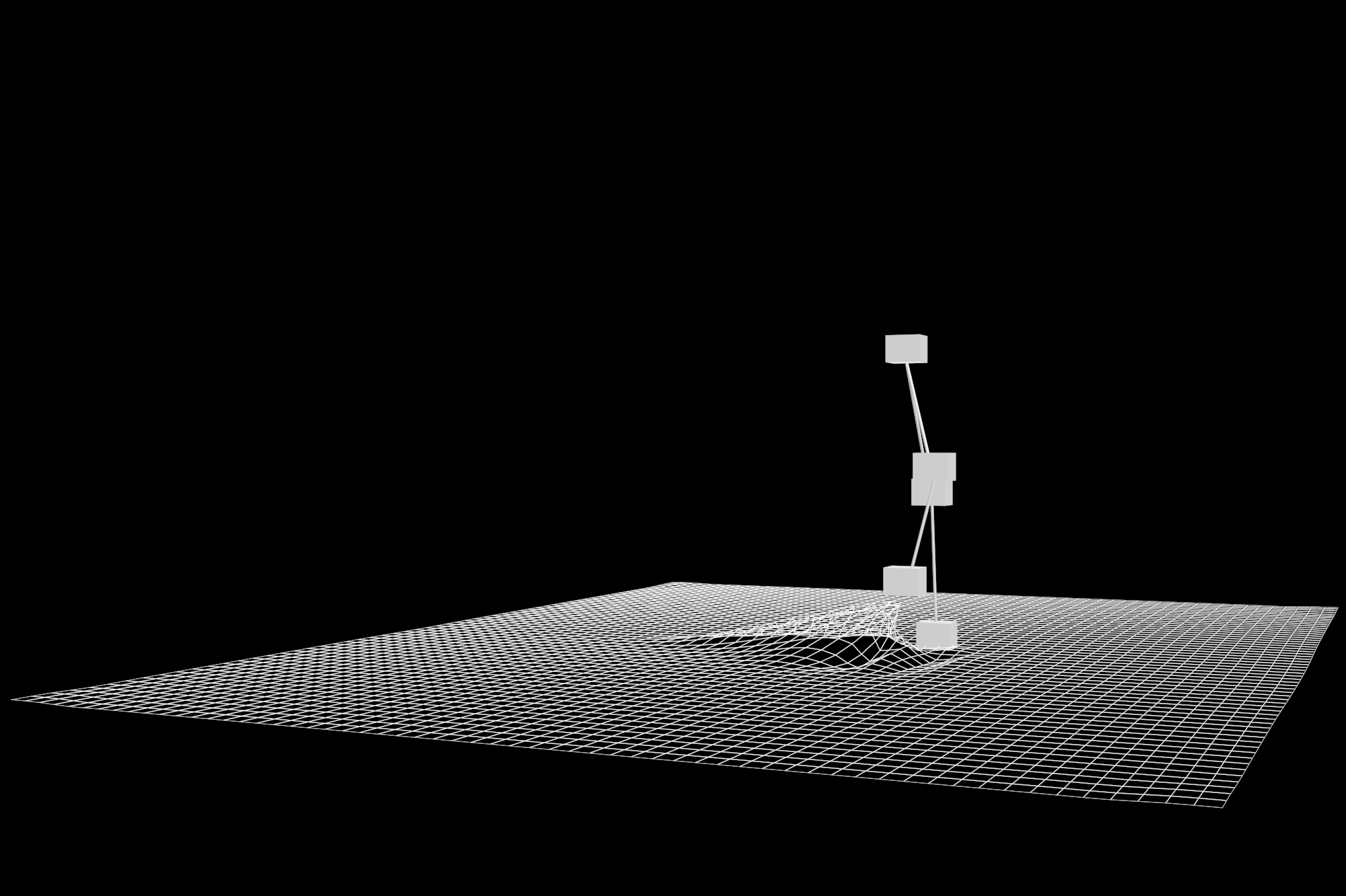

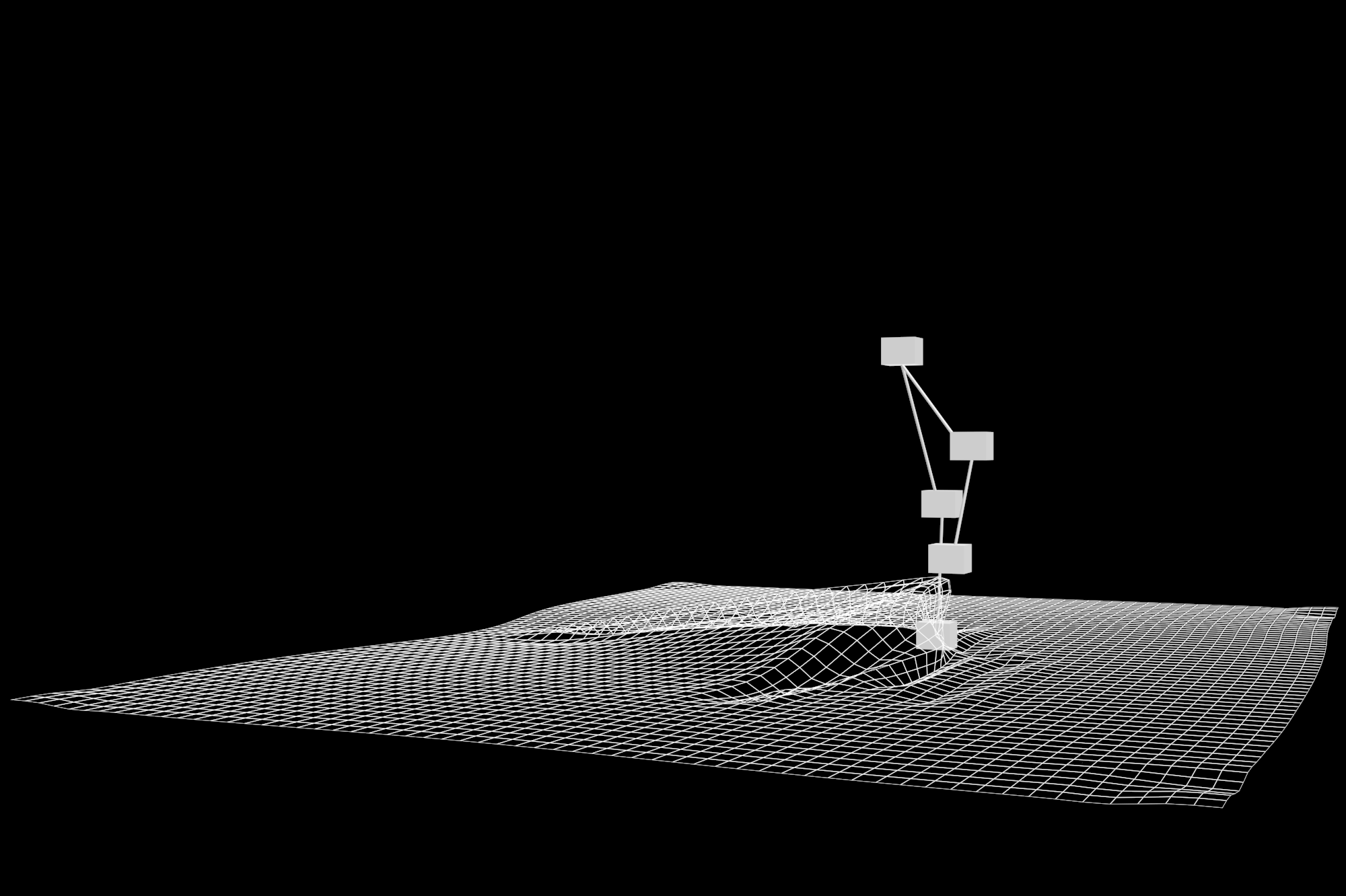

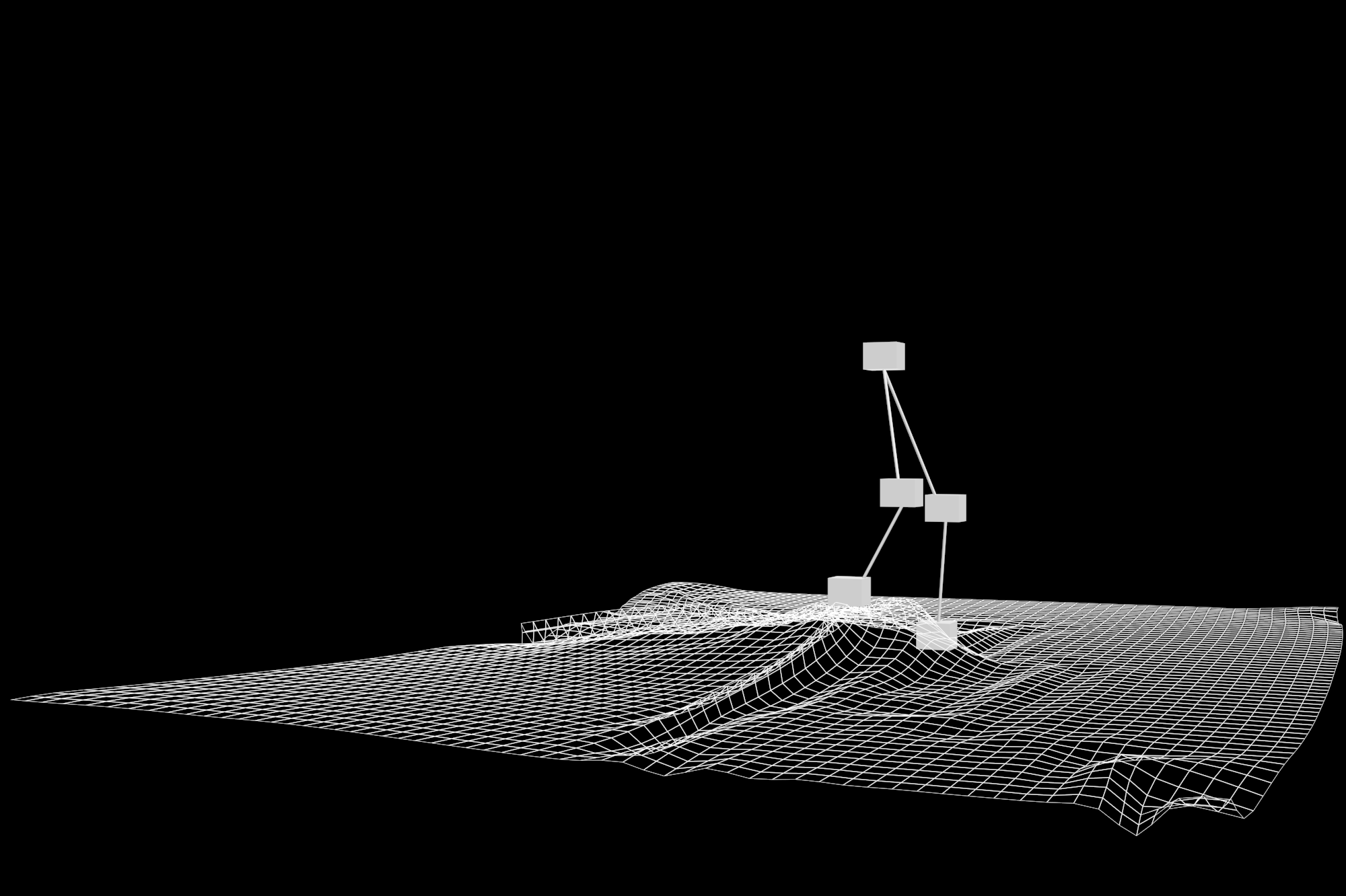

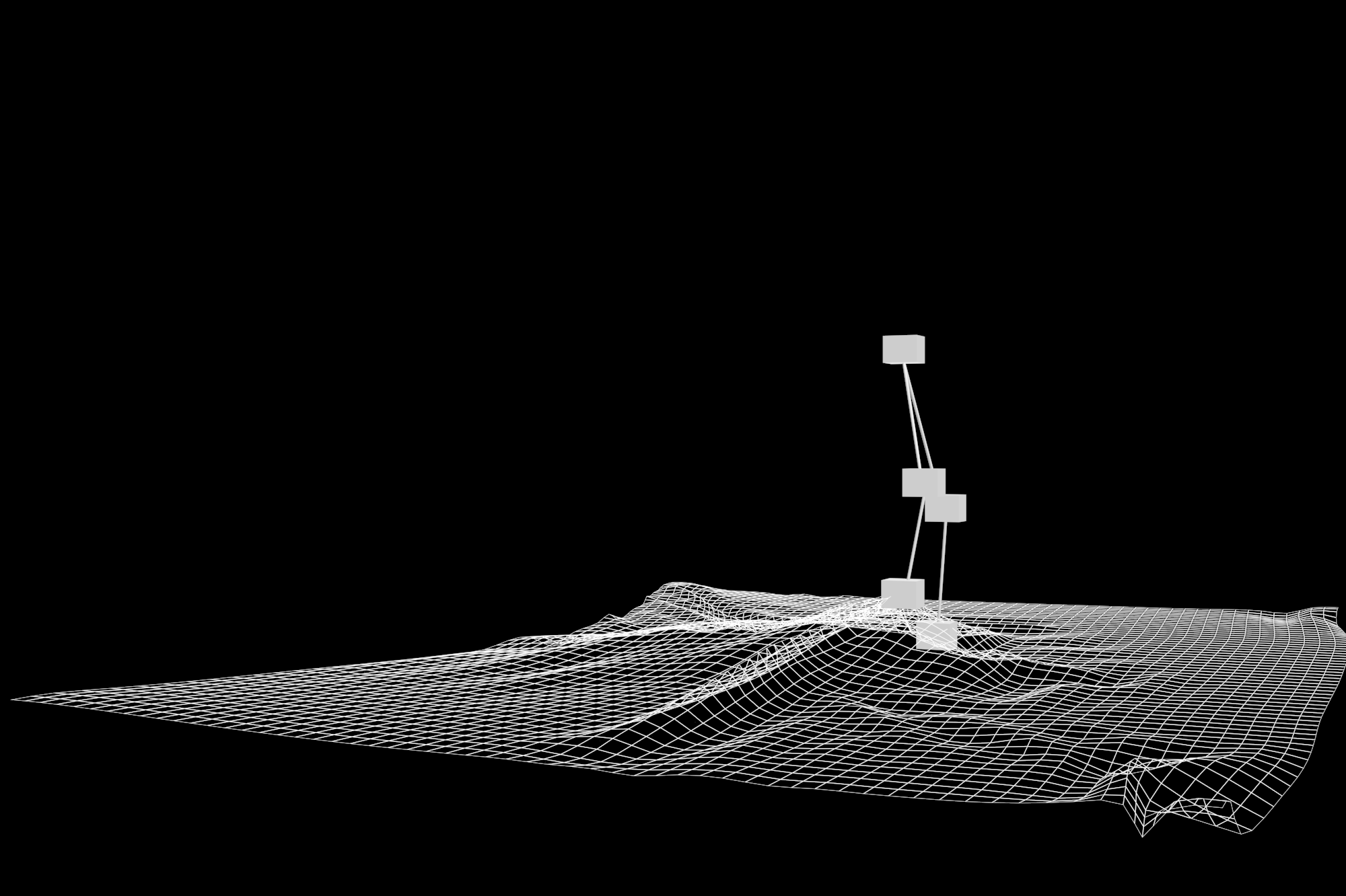

The team was working to a very tight schedule: the whole performance was created in just 2 months. With only a few chances to rehearse on the actual stage, they needed something that would not only enable them to visualise the setting, but would also give the choreographer an understanding of how the visuals would look.

So they created their own 3D environment: a virtual stage that they could assess on desktop or tablet.

To power the interactive environment we used a mix of different techniques: masking projection, lighting projection, video mapping and tracking projection.

An infrared camera tracks each dancer and derives the velocity, speed and intensity of their movement; this fluctuating data draws the contour of the mapping. The Kinect then isolates the dancer’s skeleton, which is used as a projection surface. Finally, a configuration file is generated, from which the visualisation tool reads this information.
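As a rough illustration of how speed can be derived from tracking data, the sketch below computes frame-to-frame speed from a list of floor positions and smooths it. It assumes the tracker delivers one (x, y) position in metres per frame; the function names, the frame rate and the smoothing factor are illustrative assumptions, not details from the production system.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) position on the stage floor, in metres


def speeds(positions: List[Point], fps: float) -> List[float]:
    """Speed in metres per second between each pair of consecutive frames."""
    dt = 1.0 / fps
    return [
        math.hypot(x1 - x0, y1 - y0) / dt
        for (x0, y0), (x1, y1) in zip(positions, positions[1:])
    ]


def smooth(values: List[float], alpha: float = 0.5) -> List[float]:
    """Exponential smoothing, so that visuals do not flicker on noisy data."""
    smoothed: List[float] = []
    current = None
    for value in values:
        current = value if current is None else alpha * value + (1 - alpha) * current
        smoothed.append(current)
    return smoothed
```

The smoothed value could then drive a visual parameter such as the thickness or brightness of a contour.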

“For the projection mapping all we needed really was digital keystoning as we were projecting on a flat surface. We set up the scene with 6 displays in mind and created a Frame Buffer Object for each display, which we warped to fit the edges of our projector’s throw. This gave us the advantage of calibrating the projectors from the computer.”
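Digital keystoning on a flat surface is commonly expressed as a four-corner perspective warp (a homography). The sketch below shows that general technique in plain Python; whether the team used exactly this formulation is an assumption, and the corner coordinates are made up for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a·x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner layout")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[float]:
    """Eight coefficients of the perspective warp taking 4 src corners to 4 dst corners."""
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    return solve(a, b)


def warp(h: List[float], point: Point) -> Point:
    """Apply the warp to one point of the undistorted image."""
    x, y = point
    w = h[6] * x + h[7] * y + 1.0
    return ((h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w)


# Corners of the undistorted frame, and hypothetical corners dragged into
# place during calibration so the image lands squarely on the surface.
frame = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
calibrated = [(0.05, 0.02), (0.98, 0.0), (1.0, 1.0), (0.0, 0.95)]
h = homography(frame, calibrated)
```

Because the warp is defined entirely by four corner positions, adjusting those corners in software is equivalent to keystoning the projector, without touching the hardware.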

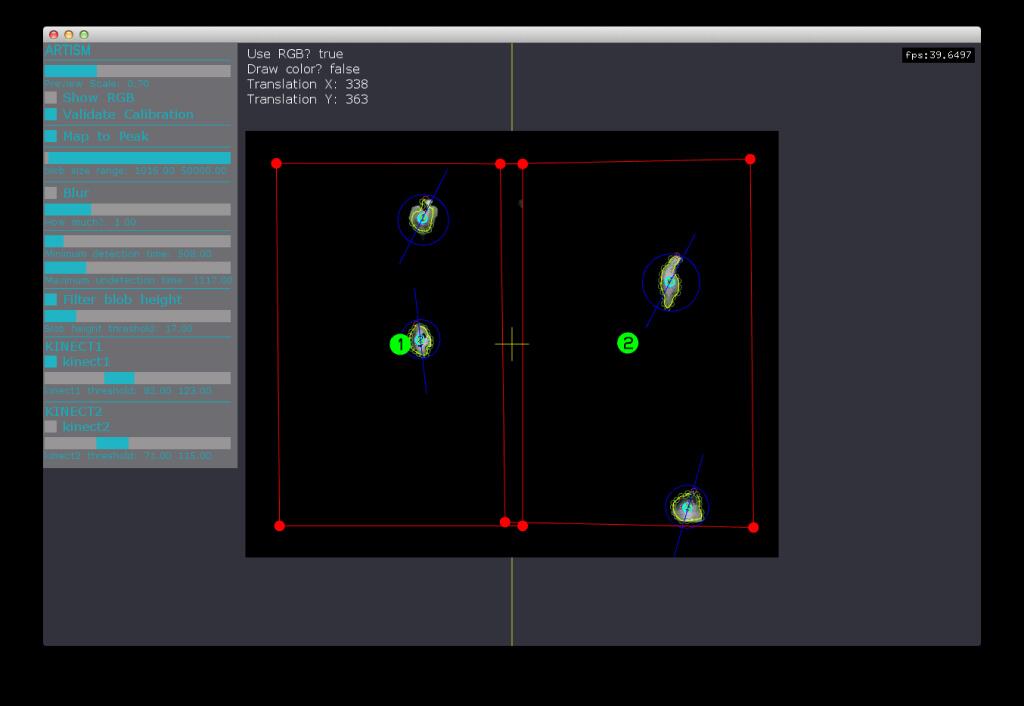

Kinect

“To be able to track dancers on the whole stage we had to use two Kinects. We created a tool that allowed us to quickly calibrate our Kinects, merge their feeds, map the merged feed to our stage floor, process and filter it and extract dancer information.” Dimitris explains.

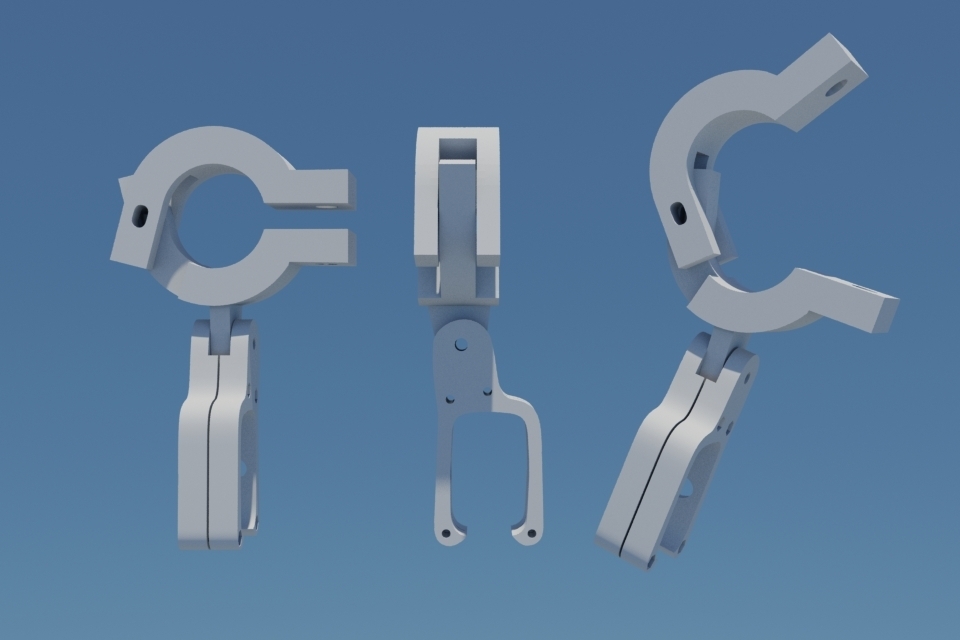

The stage is divided into two equal rectangles, each covered by a Kinect positioned 6 metres above the platform. Attaching the Kinects to the rig above the stage was a challenge in its own right. The team designed and 3D printed custom Kinect mounts that allowed them to easily rig and adjust the Kinects.
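The merging step can be sketched as a coordinate mapping: each Kinect reports positions within its own half, and these are translated into one shared stage coordinate system. The stage dimensions, the normalised input format and the de-duplication threshold below are assumptions made for illustration only.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

STAGE_WIDTH = 10.0   # metres; hypothetical, not taken from the production
STAGE_DEPTH = 8.0    # metres; hypothetical
HALF_WIDTH = STAGE_WIDTH / 2


def to_stage(kinect_index: int, local: Point) -> Point:
    """Map a normalised (0..1, 0..1) point seen by one Kinect to stage metres.

    Kinect 0 covers the left half of the stage, Kinect 1 the right half.
    """
    u, v = local
    return (kinect_index * HALF_WIDTH + u * HALF_WIDTH, v * STAGE_DEPTH)


def merge(left: List[Point], right: List[Point], min_gap: float = 0.4) -> List[Point]:
    """Combine both feeds, dropping a right-hand detection that duplicates
    a dancer already seen by the left-hand Kinect near the seam."""
    points = [to_stage(0, p) for p in left]
    for candidate in (to_stage(1, p) for p in right):
        if all(math.dist(candidate, p) >= min_gap for p in points):
            points.append(candidate)
    return points
```

For example, a dancer standing exactly on the seam is seen at the right edge of one feed and the left edge of the other; after mapping, both detections land on the same stage point and only one is kept.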

360-degree Filming

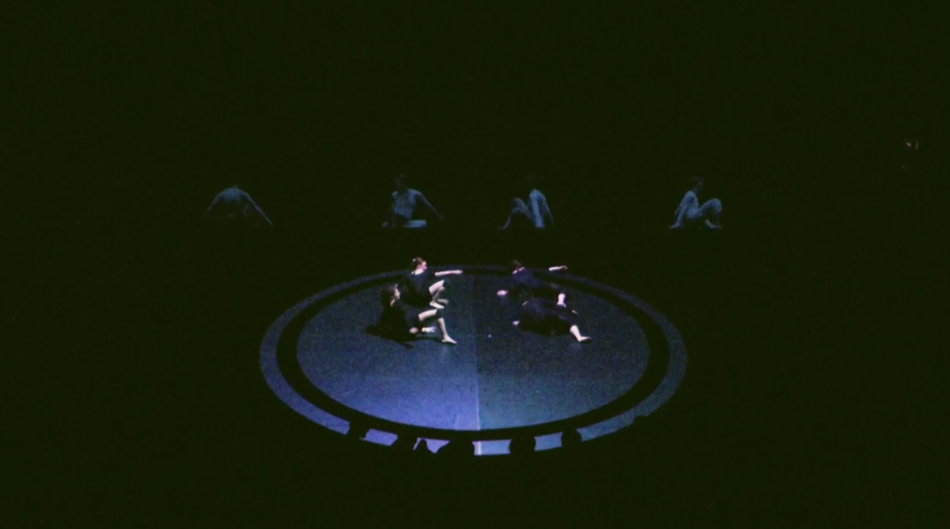

For Percept II, Eric wanted to show movement from a new perspective: in its entirety, leaving no blind spots. We had 4 dancers on stage, and for each we projected a 360-degree video on the wall behind them, showing the dance from every possible angle.

To achieve the 360-degree effect we filmed with 8 cameras arranged in a circle 10 m in diameter, with each dancer performing the choreography in the middle. The footage was then animated and edited at various bpm to match both the rhythm of the music and the choreography.
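The geometry of the camera ring is simple to work out. The sketch below places 8 cameras on a circle 10 m in diameter around the dancer; equal spacing between cameras is an assumption, as the exact layout is not stated.

```python
import math
from typing import Dict, List


def camera_ring(count: int = 8, diameter: float = 10.0) -> List[Dict[str, float]]:
    """Positions of `count` equally spaced cameras on a circle, dancer at the origin."""
    radius = diameter / 2
    cameras = []
    for i in range(count):
        angle = 2 * math.pi * i / count
        cameras.append({
            "index": i,
            "angle_deg": 360.0 * i / count,
            "x": radius * math.cos(angle),   # metres from the dancer
            "y": radius * math.sin(angle),
        })
    return cameras
```

Under that assumption each camera sits 5 m from the dancer and 45 degrees from its neighbours.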

“Not only the point of view affects the perception, but also time. We changed the point of view with the music timing. Then we did it on each ½ time, then ¼, ⅛, and 1/16 speeding the footage as the choreography accelerated.” says Sophie.
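One reading of this is that the view switches once per beat at first, then two, four, eight and sixteen times per beat. The sketch below computes switch times for a given tempo and subdivision; the tempo, the function names and the idea of cycling through the cameras in order are assumptions for illustration.

```python
from fractions import Fraction
from typing import List, Tuple


def cut_times(bpm: int, beats: int, subdivision: int) -> List[Fraction]:
    """Times in seconds of every view switch, cutting `subdivision` times per beat."""
    beat = Fraction(60, bpm)        # duration of one beat in seconds
    step = beat / subdivision
    return [step * i for i in range(beats * subdivision)]


def camera_sequence(times: List[Fraction], cameras: int = 8) -> List[Tuple[float, int]]:
    """Pair each switch time with the next camera around the ring."""
    return [(float(t), i % cameras) for i, t in enumerate(times)]
```

At a hypothetical 120 bpm, `cut_times(120, 1, 4)` yields switches every eighth of a second within one beat, which conveys how quickly the point of view changes as the subdivisions shrink.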

Motion Capture

To make Percept III possible we used motion capture, so that the movement was precise and the dancer and his avatar were synchronised. 64 sensors were attached to the dancer’s body, with nearly 160 infrared cameras on the ground and on the rig surrounding the stage capturing every movement of his body. At the same time, the capture was applied to a 3D model, which we later simply cleaned up and edited.

Using 3ds Max, we placed cameras around the 3D avatar to obtain different points of view, and edited the result to ensure a diverse and captivating video.

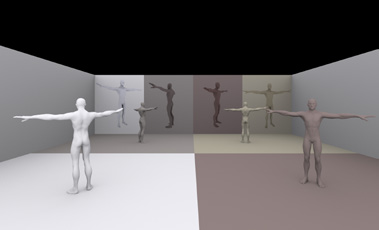

In the end, the synchronisation software we developed ensured perfect alignment between choreography, music and projection. To add more dimension, we also created an interactive environment using Kinect to track David’s movements. The spotlight, the light effects on the walls and the different shadow silhouettes all followed the dancer across the stage.

Visualisations

For the culmination, in the last Percept, technology, dance and music all merge into one unity, one movement. Each movement of the dancers triggers a visual that is projected on stage.

Visual Artist Bruno Imbrizi explains in more detail: “All of the visuals were generated by code, using the movement of the dancers as a source.

“We had three core scenes, with several states and variations in them to keep the visuals interesting during the 5’30” of the last Percept. The first scene was based on cloth simulation, the second was based on a grid of dots inspired by LED matrix panels and the last one was based on sound equalisers.”
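To give a flavour of how movement can drive a generated visual, here is a minimal sketch in the spirit of the dot-grid scene: each dot lights up according to its distance from the nearest dancer. It is not the production code; the function name, grid size, spacing and falloff radius are all illustrative assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def dot_grid(dancers: List[Point], cols: int, rows: int,
             spacing: float, radius: float) -> List[List[float]]:
    """Brightness (0..1) of each dot: 1 under a dancer, fading to 0 at `radius`."""
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            dot = (c * spacing, r * spacing)
            # With no dancers on stage the nearest distance is infinite,
            # so every dot stays dark.
            nearest = min((math.dist(dot, d) for d in dancers), default=math.inf)
            row.append(max(0.0, 1.0 - nearest / radius))
        grid.append(row)
    return grid
```

Recomputing the grid every frame from the tracked positions makes the pattern follow the dancers across the stage.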

Artism Act II premiered on February 13th as part of the Resolution! 2014 Festival, at The Place, London’s biggest contemporary dance stage.

Release Date

2014-02-13